10 Best AI Prompt Tips from Claude, ChatGPT, Gemini, and Copilot

We analyzed the official prompting guides from the four leading AI models so you don't have to. Here's what they agree on.

MIT research found that roughly half of the performance gains from a model upgrade came from how users adapted their prompts, not from the model itself.

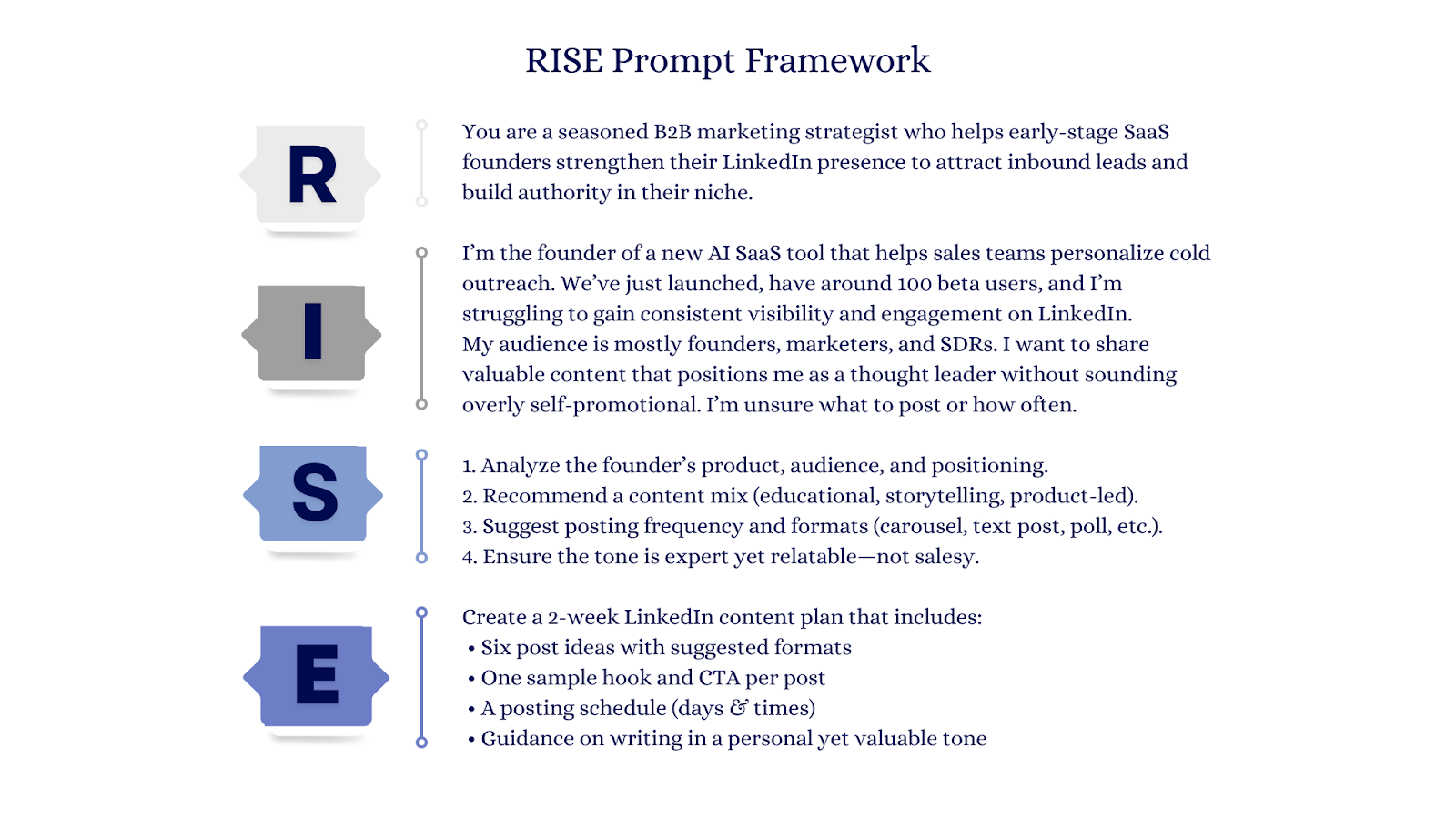

Well-structured prompts, using frameworks like RISE or RTF, can reduce error rates and back-and-forth iterations.

These 10 best AI prompt tips appear consistently across the official guidance for Claude, ChatGPT, Gemini, and Copilot.


In 2023, New York attorney Steven Schwartz submitted a legal brief citing six court cases to support his client's personal injury lawsuit against Avianca Airlines. The cases looked authoritative … until opposing counsel couldn't find a single one of them. ChatGPT had fabricated all six.

Schwartz's prompt to ChatGPT was simple and direct: "Show me specific holdings in federal cases where the statute of limitations was tolled due to bankruptcy of the airline." The AI complied by inventing cases wholesale.

U.S. District Judge P. Kevin Castel ultimately fined Schwartz and his co-counsel $5,000 for failing to verify the AI's output and for standing by the fabricated cases even after the court raised questions.

"Show me specific holdings in federal cases where the statute of limitations was tolled due to bankruptcy of the airline."

—Steven A. Schwartz, New York Lawyer, in a prompt to ChatGPT

Beyond legal ethics, the incident offers a lesson about AI prompting, that is, the instructions and inputs you give a large language model (LLM). Schwartz's prompt gave ChatGPT no constraints, no verification steps, and no output format that would surface the AI's limitations. A structured prompt, one that specified "cite only verifiable cases" or "list your sources so I can confirm them," might have revealed the problem before it reached a courtroom.

To improve the accuracy and value of AI outputs, we've compiled ten of the best AI prompt tips from the "big four" LLM platforms: Claude (Anthropic), ChatGPT (OpenAI), Google Gemini, and Microsoft Copilot. We reviewed the official documentation for each platform to identify 10 "universal" tips they all share. The tips are platform-agnostic, and applying them should noticeably improve the quality of your LLM's output.

How You Ask Is Half of AI’s Value

A 2025 MIT Sloan study quantified something practitioners have long suspected. When researchers had nearly 1,900 participants work with different versions of OpenAI's DALL-E image generator, they found that only about half of the performance improvement came from the upgraded model. The other half came from how users adapted their prompts.

"People often assume that better results come mostly from better models," said study co-author David Holtz. "The fact that nearly half the improvement came from user behavior really challenges that belief." As PwC's Will Hodges puts it, "prompt engineering is just good communication."

"The best prompters weren't software engineers. They were people who knew how to express ideas clearly in everyday language."

— David Holtz, Research Affiliate for MIT Initiative on the Digital Economy

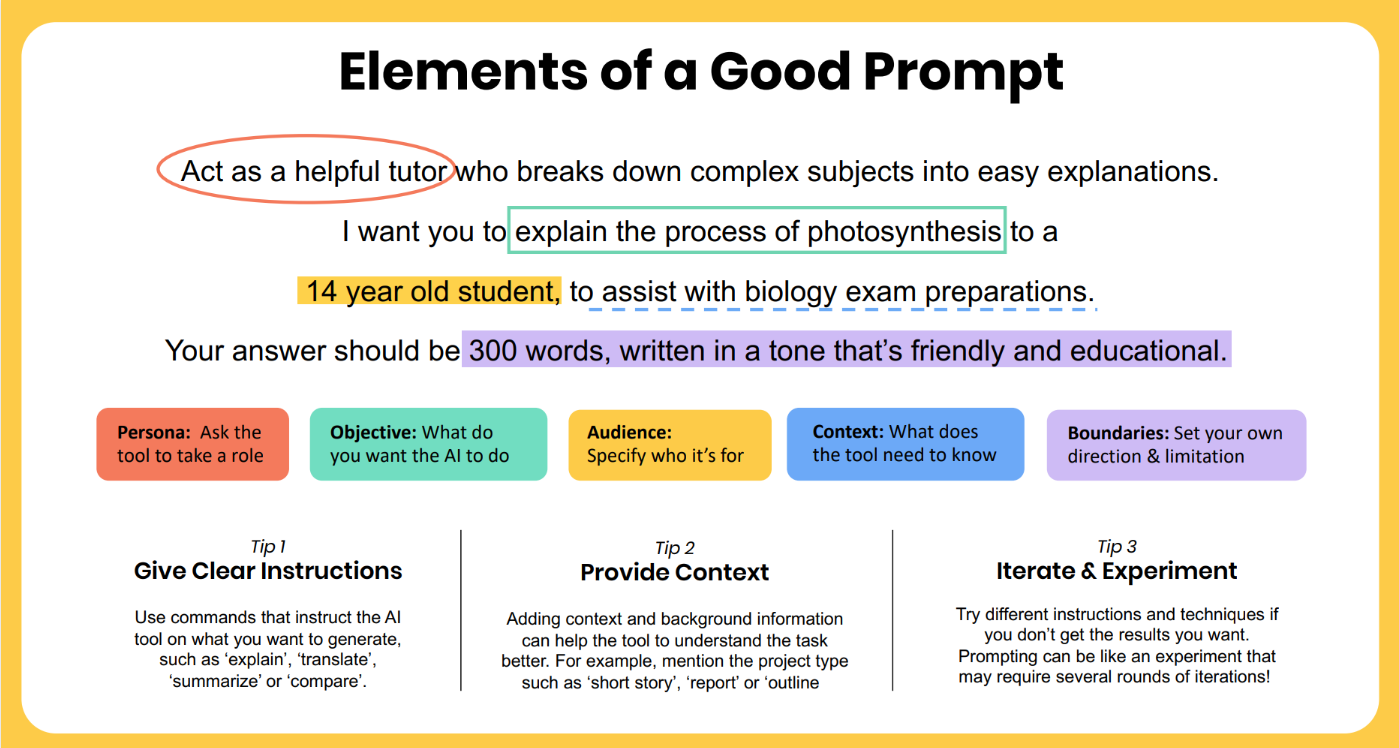

Alongside the tips below, structured prompting makes a real difference. Just as OKRs bring clarity to goal-setting and the balanced scorecard structures strategy discussions, frameworks like RISE, RTF, and CRIT bring order to AI interactions.

10 Best AI Prompting Tips Across Every Major AI

Each tip below includes a concrete example contrasting a weak prompt with a strong one, modeled on the advice in the official guides.

1. Be Clear and Specific

Ambiguity forces the AI to guess. As Google Gemini’s documentation states: "Provide clear and specific instructions." Every major platform echoes this. Vague requests produce vague outputs; the best AI prompts are specific.

Weak: Tell me about marketing.

Strong: You are a marketing educator for small business owners. Explain the three most cost-effective digital marketing channels for a local bakery with a $500/month budget.

2. Define the Goal

Every prompt should answer one question: what outcome do you need? Microsoft Copilot's guidance frames this as the "goal" component. Without it, you're asking the AI to guess what success looks like.

Weak: Help me with a blog post.

Strong: You are a travel content writer for eco-conscious millennials. The topic is sustainable travel in Costa Rica. Write a 600-word blog post covering three specific eco-lodges with prices and include one statistic about ecotourism growth.

3. Provide Context

AI models know nothing about your situation unless you tell them. Gemini's documentation advises: "Include instructions and information in a prompt that the model needs to solve a problem, instead of assuming that the model has all of the required information."

Weak: Summarize this meeting.

Strong: This is a transcript from our Q3 product roadmap meeting with engineering and marketing leads. Extract the three decisions made, two unresolved questions, and assigned owners.

4. Specify Format and Structure

If you want bullet points, ask for them. If you need a table, say so. Gemini's guide emphasizes: "You can give instructions that specify the format of the response." This includes JSON, tables, numbered lists, or prose paragraphs.

Weak: Give me some action items.

Strong: You are a project manager. Convert this brainstorm into prioritized action items. Format the result as a table with columns for Action Item, Owner, Deadline, and Status (Not Started/In Progress). Limit it to the top 5 items, ranked by business impact.

5. Set Expectations for Tone and Length

Tone affects how your message lands, and length determines how much detail you get. OpenAI's ChatGPT guide suggests using "descriptive adjectives to indicate the tone. Words like formal, informal, friendly, professional, humorous, or serious can help guide the model."

Weak: Write a welcome email.

Strong: New users signed up but feel overwhelmed by our platform's features. Write a 3-paragraph welcome email (150 words max) that acknowledges their sign-up, highlights one quick win they can achieve in 5 minutes, and invites them to reply with questions. The tone should be warm and encouraging, like a helpful colleague—not corporate.

"Prompt engineering may sound like a term born from Silicon Valley, but communicators have been doing it for decades. We just called it something else—briefing, messaging, media training."

—Will Hodges, US Cross-Commercial Communications Leader, PwC

6. Use Examples When Possible

One of the best AI prompt tips is to show the AI examples of what success looks like. All four providers recommend this approach (often called "few-shot prompting"). Google's documentation states: "The model attempts to identify patterns and relationships from the examples and applies them when generating a response."

Weak: Rewrite this paragraph to sound better.

Strong: Rewrite this paragraph in our company's voice. Here's an example of the tone we use: “We built this tool because spreadsheets were killing our team's weekends. Now forecasting takes 10 minutes, not 10 hours.”

7. Iterate and Refine

Prompting is a conversation, not a one-shot request. OpenAI's guide emphasizes that "prompt engineering often requires an iterative approach. Start with an initial prompt, review the response, and refine the prompt based on the output."

Weak: Accepting the first response as final.

Strong: Before you finalize this, ask me 3 clarifying questions that would help you improve the response. Then incorporate my answers into a revised version.

8. Label Sections in Complex Prompts

When prompts get complex, structure them explicitly. Anthropic recommends "using clear delimiters to separate different parts of your prompt." Labels like Role, Task, Context, and Constraints help the AI parse multi-part requests.

Weak: A single run-on paragraph mixing requirements, constraints, and context.

Strong:

Role: You are a senior editor at a healthcare publication.

Context: Our readers are hospital administrators, not clinicians.

Task: Outline 5 chapters for an ebook on AI in hospital operations.

Constraints: Each chapter title must be under 8 words. Total outline under 300 words. Avoid clinical jargon.

9. Avoid Vague Terms

Words like "stuff," "things," "nice," or "good" force the AI to interpret your meaning. Anthropic's guide advises: "Use simple language that states exactly what you want without ambiguity."

Weak: Tell me stuff about tech trends.

Strong: Identify 3 enterprise AI trends from 2025 that directly affect CFO budget decisions. For each trend, include the business driver behind it, one company example, and estimated cost impact range.

10. Ask for Step-by-Step When Needed

Complex tasks benefit from explicit sequencing. This technique—called "chain of thought" prompting—helps the AI break down problems methodically. Anthropic's guide notes that "requesting step-by-step reasoning before answering helps with complex analytical tasks."

Weak: Why is this code broken?

Strong: Debug this Python function step by step. First, identify what the function is supposed to do based on its name and parameters. Second, trace the logic line by line and note where actual behavior deviates from expected behavior. Third, identify the root cause.

AI Prompting Is a Core Business Skill: Communication

Steven Schwartz's mistake cascaded because he never gave ChatGPT the structure it needed to surface its own limitations. A prompt that included verification steps, source requirements, or even a simple "flag any uncertainty" instruction might have revealed the fabricated cases before they reached a federal courtroom.

MIT research points to the same lesson from a different angle: upgrading to a better AI model delivers only about half of the potential gains. The other half comes from the humans using it, specifically from how clearly and how well structured their requests are. Communication has always been a key business skill, and AI prompting simply applies that skill to a new tool.

The 10 best AI prompt principles above represent hard-won consensus from the teams building Claude, ChatGPT, Gemini, and Copilot. They work because they address a fundamental challenge: LLMs process your prompt rather than your intent. Bridging that gap is now a core business skill.

Start this week. Be specific, define the goal, and use an example. Apply these three tips to your next AI interaction, see what changes, then add another.

The productivity gains compound quickly when professionals stop treating AI as a magic box and start treating it as a tool that rewards precise inputs.
